Adobe launched their generative AI modelling system, Firefly, at the recent Adobe MAX. It certainly caused a stir among creatives, who seem equally excited and dismayed by the new tool. As it is a tool I can leverage, and as I am on the VIP list with early access, I jumped in for a look.

The system lets people generate entire compositions from nothing with a simple text prompt. Type in, for example, ‘waterfall running to a river with mountains’, and a few seconds later the system presents a number of designs to choose from. These can be refreshed if you don’t like them and want to try again. So far, so similar to other AI image generators.

What is different about Firefly is that it is aimed squarely at creatives: people interested in making real-world designs rather than images for social media. One example of this is the text engine.

Firefly Text Engine

This innovative system lets the user choose from a (currently) limited range of fonts, pick colours, and decide whether the AI output should be a tight, medium, or loose fit, which governs how closely the AI stays within the font outline. Users then enter the text they want to style, plus a prompt describing the effect they want… and Firefly uses the same free-text generation engine to build unique variations from the selected criteria. Let me explain.

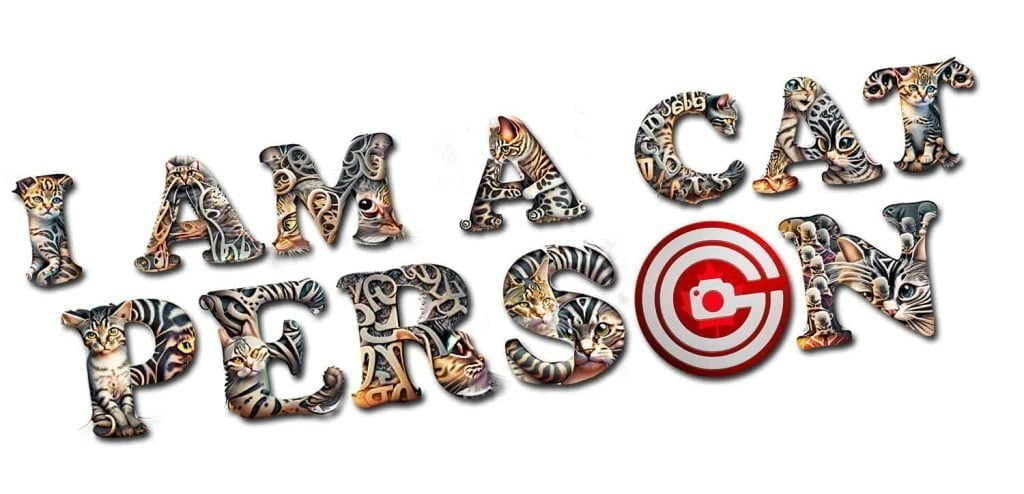

I chose a heavy font in black, used the words ‘I am a cat person’ as the input text, then ‘cat person’ as my AI prompt. This was a test, and I was clearly not overthinking at this stage. The output was fast, and amazing.

Firefly is still in beta. Users can be considered beta testers until the system is refined sufficiently for commercial use, at which point it may break off as a paid product or be folded into the current Adobe Creative Cloud subscription model. Adobe were avoiding any reference to this, or questions on the subject. It may be that they have not yet made a decision on the matter, but I very much doubt that to be the case. They just don’t want to let the (ahem) cat out of the bag. Sorry, couldn’t resist.

Practical Use

After seeing what the Firefly text engine produced, I downloaded the transparent PNG file and took it into Photoshop for a little cleanup. I shaved off a couple of rough edges (it is beta, so that’s to be expected) and massaged some extra layers. To discourage troll downloads, I added my logo in place of the ‘O’ and set the design into a T-shirt mockup I keep to hand for making real T-shirts. It looked so good I wanted to show it off. So here it is.

The AI does a phenomenal job. I can see it being an inspiration for those days when you just can’t think. No more ‘artist’s block’: typing a few words generates more ideas than will ever be needed. This is where critics believe Firefly and other AI systems will do artists out of work, and that is, to be fair, a valid concern. What client wants to pay a designer when they can get a great design themselves just by typing a few words?

Clients on a budget may decide that it’s ‘good enough’ if Firefly can save them from hiring someone. The output quality will continue to improve, removing much of the need for someone who knows Photoshop. But.

What Firefly does NOT offer (at least, not yet) is the ability to use any of your own content: logos, headshots of the CEO, product photos, etc. Cometh that day, I will be more concerned. Until then, there is a place for designers on real-world projects, particularly commercially driven ones. Firefly is another amazing AI that I believe is best thought of as a springboard for creativity. Once it has done what it does, the designer finishes the job: to spec, on time, on budget. Adobe Firefly is a tool, like any other.

And, so far at least, this is what it can do with minimal input. Pretty good. But it still needs me to feed the cat.